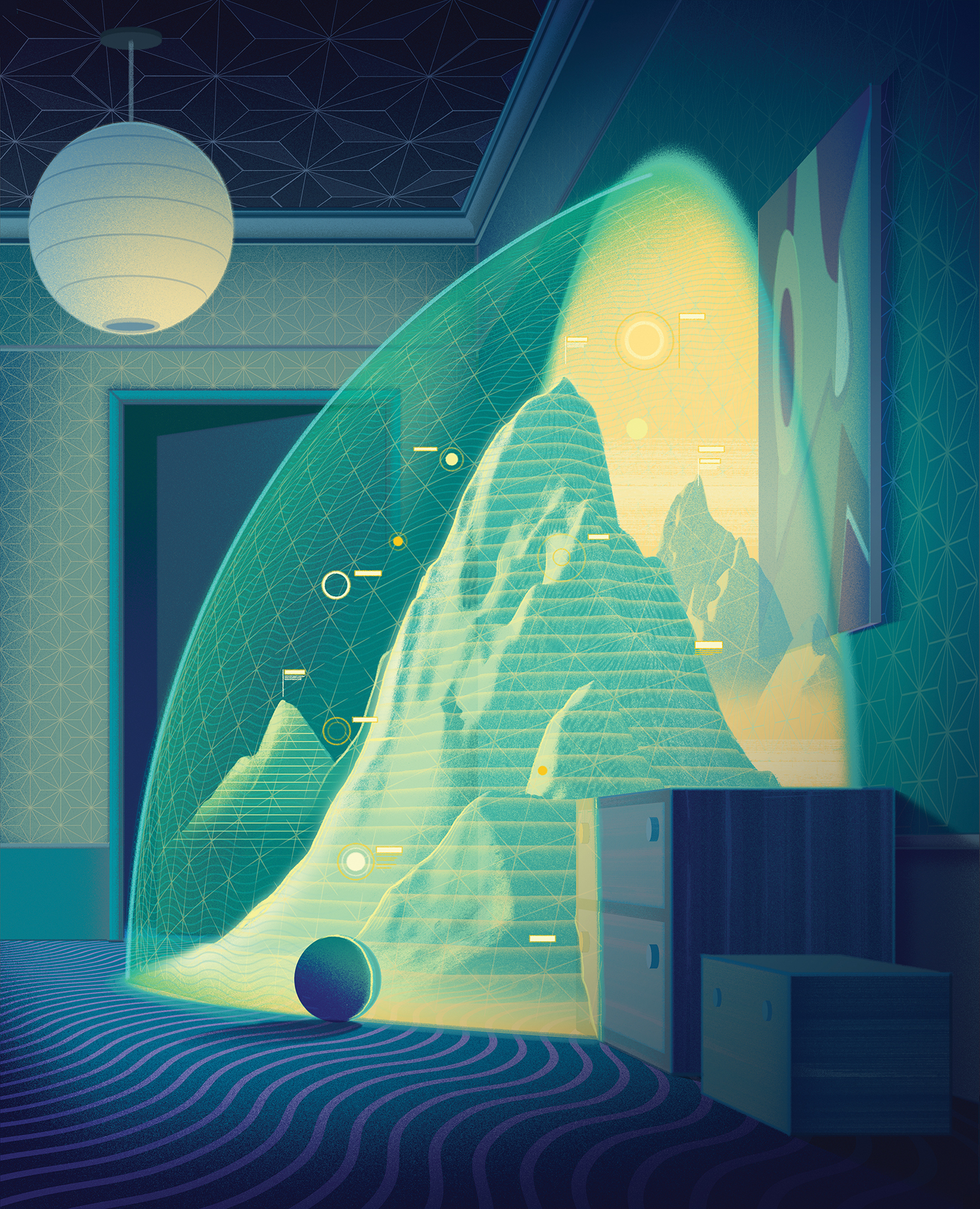

Virtual reality is here. In fact, it’s everywhere. Beyond video games, it is helping therapists to treat PTSD, allowing medical students to do virtual operations, and letting engineers test vehicle safety before the car is built.

But in order to provide a genuine experience through virtual reality (VR), manufacturers must first understand how vision works in the real world. And understanding a complex system like vision requires advances on multiple fronts. At Penn Arts and Sciences, psychologists and physicists are looking more closely at the basics of how we see.

The human visual system acts a bit like the cash-starved manager of a baseball franchise. Limited on resources, he must decide what is most important for a winning season. Which approach will he take? Will he focus on pitchers or hitters?

Likewise, the human eye is constantly flooded with electromagnetic radiation, from which it must distinguish essential information like color, depth, and contrast. Photoreceptors in the flat retina transcribe the light they receive into electrical signals that the brain filters and manipulates into our perception of a vibrant, three-dimensional world.

Like any good baseball manager, Penn’s researchers are relying heavily on statistics. They select and characterize known features of our visual world, such as the RGB (red/green/blue) value of a dot on a screen or the precisely measured distance from the camera to an object in a photograph. The scientists plug these numbers into computer programs that combine the values to predict how the visual system will process the features. Researchers then compare the predictions to the results of experiments with human subjects. The gap between idealized models and human behavior provides visual neuroscientists with clues about how our brains process light to produce our perception of the world.

Vijay Balasubramanian, Cathy and Mark Lasry Professor of Physics and Astronomy, is interested in the basic science of vision, its neural biology, and the perceptions created by the visual system. “I’d like to understand how the world around us isn’t necessarily the thing inside our head,” he says.

Five years ago he started collecting photographs in an effort to answer one question about the human visual system: Why is the retina equipped with so many dark spot detectors? He assembled a series of images of natural landscapes in Botswana, in areas unaltered by human intrusion and similar to where the human eye is thought to have evolved. Balasubramanian and collaborators David Brainard, RRL Professor of Psychology, and Peter Sterling, now an emeritus professor of neuroscience, analyzed the photos pixel by pixel to evaluate the amount of contrast that exists in our natural surroundings.

One would imagine that bright spots dominate the sun-covered terrain of Africa, but statistical analysis of the photos showed that in a “certain precise sense” the world has more dark spots than light. “Peter Sterling and I were able to build a fully quantitative physics-style theory of exactly what increase in proportion of your dark spot detectors would be best for your vision,” says Balasubramanian. “We explained from first principles why the visual system of animals devoted so many more resources to dark spots than to bright spots.”
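The kind of pixel-by-pixel count described above can be illustrated with a minimal sketch. It is not the researchers’ analysis: the article does not define the “certain precise sense,” so the sketch assumes a “dark spot” is a pixel dimmer than the average of its immediate neighborhood, and it uses a synthetic image with skewed (log-normal) intensities as a stand-in for a natural photograph. The function names are hypothetical.

```python
import numpy as np

def local_mean(image, radius=1):
    """Average each pixel's (2*radius+1)-wide square neighborhood (edges padded)."""
    padded = np.pad(image, radius, mode="edge")
    size = 2 * radius + 1
    height, width = image.shape
    total = np.zeros_like(image, dtype=float)
    # Sum shifted copies of the padded image to build a simple box filter.
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + height, dx:dx + width]
    return total / size**2

def dark_bright_fractions(image, radius=1):
    """Fraction of pixels darker / brighter than their local average (assumed definition)."""
    contrast = image - local_mean(image, radius)
    return np.mean(contrast < 0), np.mean(contrast > 0)

# Assumption: a log-normal image stands in for a real landscape photo.
rng = np.random.default_rng(0)
image = rng.lognormal(mean=0.0, sigma=1.0, size=(200, 200))

dark, bright = dark_bright_fractions(image)
print(f"darker than surroundings: {dark:.2f}, brighter: {bright:.2f}")
```

With skewed intensities, a few very bright pixels pull the local average up, so most pixels fall below it. Under these assumptions, that is one way a sunlit scene can still contain more dark spots than bright ones.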

According to Alan Stocker, Assistant Professor of Psychology, the phenomenon that Balasubramanian describes exemplifies a common theory in visual neuroscience: that an encoded trait in the visual system must be an evolutionary adaptation. “Neural resources are allocated according to what is more frequent in the world, more important,” says Stocker.

When Balasubramanian builds his models to study the visual system, he programs the computer to take this assumption into consideration. However, this is not the only approach to determining how the brain manages vision. Instead of viewing the problem from the perspective of how the brain adapted to optimize the visual system, Assistant Professor of Psychology Johannes Burge accepts what is in place and investigates the best way to use it.

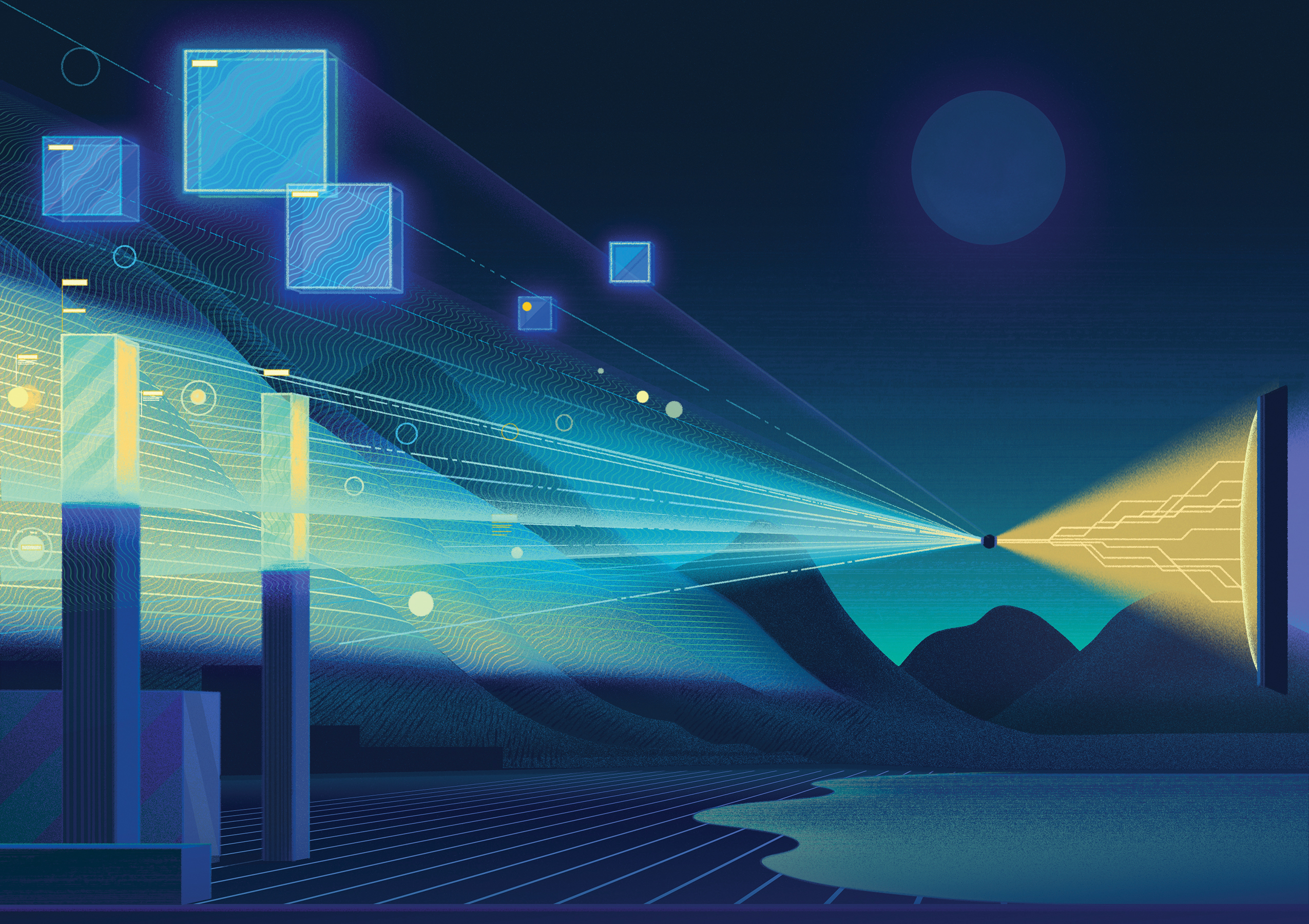

Like Balasubramanian, Burge uses statistics to calculate his computer models. Burge implements what’s called an “ideal observer,” a computer program designed to produce the best statistical estimates of what is in the world given the eye’s limitations. Recently he has applied his models to questions surrounding how the eye handles depth.
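To make the idea of an ideal observer concrete, here is a generic textbook-style sketch, not Burge’s model. It assumes a single noisy distance measurement with Gaussian noise, a hypothetical prior that favors nearer distances, and a grid of candidate distances. It then picks the most probable distance given both the measurement and the prior. All names and numbers are illustrative.

```python
import numpy as np

def ideal_observer_estimate(measurement, candidates, prior, noise_sd):
    """Return the most probable candidate and the full posterior distribution."""
    # Gaussian likelihood of the measurement under each candidate value.
    likelihood = np.exp(-0.5 * ((measurement - candidates) / noise_sd) ** 2)
    # Bayes' rule: posterior is proportional to likelihood times prior.
    posterior = likelihood * prior
    posterior /= posterior.sum()
    return candidates[np.argmax(posterior)], posterior

# Hypothetical candidate distances (meters) on a 0.1 m grid.
candidates = np.linspace(0.5, 10.0, 96)

# Assumed prior: nearer distances are more common than far ones.
prior = 1.0 / candidates
prior /= prior.sum()

estimate, posterior = ideal_observer_estimate(4.0, candidates, prior, noise_sd=1.0)
print(f"measured 4.0 m, best estimate {estimate:.1f} m")
```

Because the assumed prior favors nearer distances, the estimate lands slightly below the raw measurement. With a flat prior, the estimate would simply equal the measurement.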

To study depth, Burge first produced an accurate data set of depth measurements to feed into his computer model. By fitting a robotic scaffold with a laser scanner atop a camera, his group collected occlusion-free left-eye and right-eye photographs of natural landscapes in which every pixel has a known distance. He then fed the distance measurements into a computer to establish a true depth profile of the photos. Finally, Burge used his ideal observer models to discover the best way for the eye to perform a task involving distance.

One such task is focusing. Picture your iPhone, says Burge. The camera focuses in a jerky procedure of guessing and checking to find the point of focus. Human eyes (or at least young human eyes), by contrast, zero in immediately on a target.

Using the ideal observer, Burge’s group calculated the statistically optimal way of determining focus. It turned out to be exactly how the human eye works: by exploiting properties of the light hitting the eye. Burge wants to know if we could design technology to do the same thing.

“We realized from studying natural images that there are some statistical properties within images that are relatively stable,” says Burge. To focus on a target, the human eye takes advantage of the way different wavelengths of light refract at different angles when passing through the cornea. Using image statistics, Burge found an analogous property in photographic images that could help a camera lens focus. “If you understand how best to perform a task using natural images then you can build it into imaging devices and robotics,” says Burge.

Modeling the visual system in this abstract way can lead to valuable applications in visual technologies. “We have the potential to revolutionize how autofocus mechanisms work in cameras,” says Burge.

Robust models of the visual system are also critical. Alan Stocker developed a method that has proven influential in the field of visual neuroscience. It blends the statistics of Balasubramanian’s evolution-based approach with Burge’s emphasis on how the eye performs with the system it has in place.

“The visual system has adapted in both ways, not only in the way it interprets the truth from the information but also in the way it represents the information in our minds,” says Stocker. His model has proven to be quite effective in explaining the visual system’s biases in perceptual inference.

When humans think they see an object, they inherently complete a mental picture of the object. “When I see an object, if I think it’s an apple then the features have to be consistent with that notion,” says Stocker. “We don’t perceive colors or shapes individually, we perceive them as parts of a whole.”

All of this research has the potential to enhance a virtual experience. “Virtual reality is about how you create perceptions of things and the tricks that you can use to manipulate the visual environment to create this perception,” says Balasubramanian. “If you understand those tricks you can use them to your advantage as a virtual reality engineer.”

David Brainard’s group is taking an indirect approach to the vision computation problem. Brainard is director of the Vision Research Center and co-director of the Computational Neuroscience Initiative at Penn. In addition to his extensive work on color perception, he has contributed to a number of the research efforts on visual neuroscience. He’s currently in the discovery stage of an experiment to create a fully computable model of the human eye. Brainard says, “Our motivation was to make the modeling more neurally realistic, so that we can ask questions such as how well can we train an artificial system embodying a precise model of the early visual system to accurately perceive object color despite variations in illumination.”

“Although the advances in computing capability have made these new approaches possible, one of the big challenges with virtual reality is that faithfully recreating the images present on the back of the eye requires way more computing power and bandwidths than even the best computers have right now,” says Burge. A complementary branch of vision research aims to directly understand the brain’s computations.

Assistant Professor of Psychology Nicole Rust measures the electrical pulses of neurons in the brain as her subjects search through images to find one that matches a specific target object. After recording these pulses, her team examines how the visual information was processed in the brain by using those signals to recreate the subject’s task. “We are considering the patterns of responses across a population of these nerve cells and we are looking to see how these patterns change with different variables in these experiments,” says Rust. She found that when a subject observed a target match, the pattern of neuron activity was distinct from the pattern seen when the subject looked at other images.

Balasubramanian also conducts experiments to directly measure neural signals as they leave the retina. The aim of the direct measurements carried out by Rust and Balasubramanian is to identify the algorithms used to transcribe visual information to the brain. If they can understand vision at that level, researchers could unlock remarkable technologies like prosthetic eyes or robots with human-like vision.

“We are unquestionably taking advantage of the power of computers,” says Rust. “There is very little that I do that I could analyze in an Excel spreadsheet.”

But as Burge points out, for all their improvements in capability, computers cannot outperform the processing power of a human brain. The tasks that the visual system allows the brain to perform are remarkable when its limitations are weighed against its success.

“A lot of the job of the visual system is actually to throw away information, because you have an incredible amount coming to the eye from all the photons everywhere all the time,” says Balasubramanian. “In the end you have to whittle this information down to whatever it takes to perfectly swing a bat, just now, to hit a ball.”